Classifying Birds:

an exercise in yak shaving.

History:

A few years ago, I was having a heated discussion about the Daily Mail. Mostly the usual bollocks about immigration. This got me thinking: a number of “British” garden birds are in fact dirty foreigners (most famously the swift, but also, surprisingly, the robin).

This led me to ask the question: are immigrant birds getting fat on free handouts from UK taxpayers’ gardens? Well, you know, something along those lines anyway. On a more scientific note, I wanted to find out if I could measure the average size of a feeding bird, non-invasively.

The short answer is: no

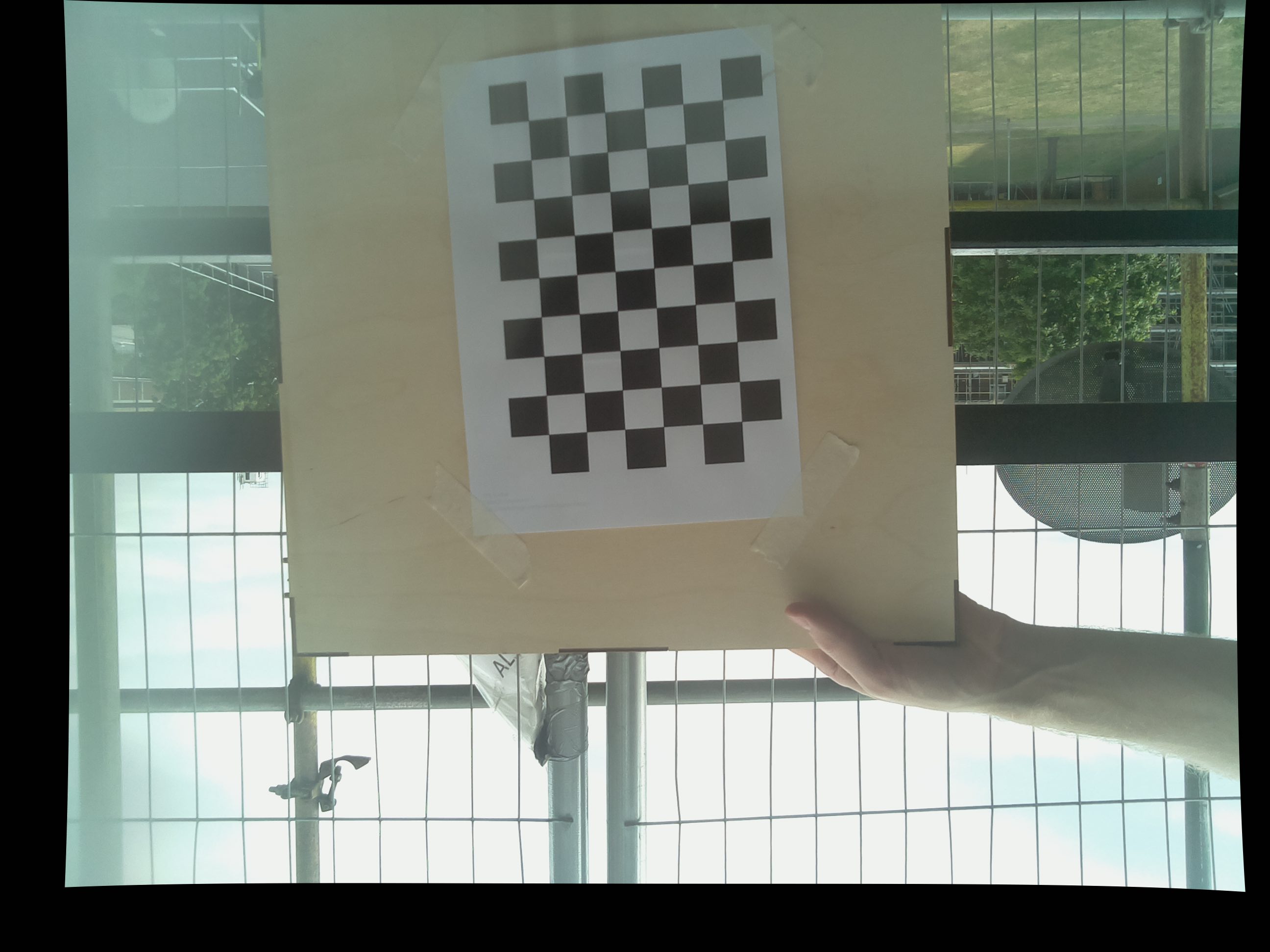

Basically I built a stereo camera pair, mounted and calibrated it, and tried to rebuild a 3D model of things put in front of it. Firstly, that’s a terrible way to do it; secondly, there is no second.

Despite getting a decent calibration, I wasn’t able to re-build a dense enough point cloud to get a usable model on a chequerboard, let alone a complex smooth organic shape such as a bird.

The project lies dormant.

I still want to know what birds are in my garden

It’s 2015, and there are rumblings in the machine learning jungle. Caffe has recently been updated, and it comes with excellent documentation and a Python interface. However, deep learning is a mystery. I struggle to get BVLC fine-tuned on a subset of ImageNet.

I have no idea what I’m doing, and I create a model that is significantly less effective than guessing using random numbers. The pre-built model is OK, but doesn’t give specific bird types, just “bird”.

Time passes

I attempt again in 2016. Nothing really changes; I still really don’t understand what I’m doing. Explanations of sigmoid activations and gradient descent really don’t help either.

2017

At $work, I was moved onto a partial rebuild of the video production pipeline. This is in itself an exercise in self-flagellation. $work only deals with text, and it takes a billion inefficient systems to do it. Video is a whole level harder.

Anyway, the reason this came up is metadata. Because of the half-arsed way the video pipeline is set up, we lose all metadata when we deliver the final video to the website. (Fun fact: it’s quicker to film a general view of Westminster than it is to try to find, download and import a previous shot…)

Why is this important? Tagging. $work makes a lot of videos about famous political and business types. To make things searchable, these famous people are tagged. In written articles this is simple, as you can just use regex. For video it’s a manual process.

So fucking what? Well, facial recognition is a thing that machine learning can do, and a common thing to train models on is celebrities. Politicians are celebs… how hard can it be?

Signal to noise.

Where I had been going wrong was in the choice of source material. When you “train” or “tune” a model, you are giving the model a selection of pictures that all contain the same thing (either a face, animal or object). These groups are then repeatedly fed into the machine and tested against a picture that is known to contain that object.

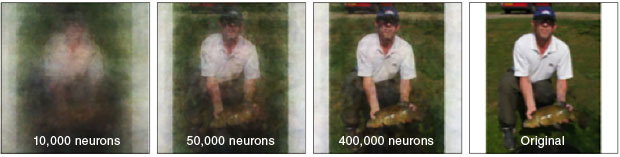

Kinda like this, well not at all. But basically, through repetition, the model begins to work out what features of an image define, say, a face or a telephone, and how that is different from a tomato.

This means it looks for anything that is common, or repeated. Let’s look at an animal example:

Both of these pictures contain UK house sparrows. Good enough for a machine learning algorithm to pick out, right?

WRONG. FUCKING WRONG. This, dear reader, is the lesson that took me way too long to figure out.

When we look at the image, we instantly see the sparrow, because we are looking for it, and have a very large library of objects we know and recognise. We know that it’s in grass, and that grass is green; we know that it’s outside.

The machine knows nothing.

So what’s my point? Every good photo of a UK bird is taken outside, and has lots of surrounding context: trees, grass, snow (for robins). All of these are repeated across the images.

So when the machine is learning what a sparrow is, it sees the grass, compares it to the control image, it matches, bing, move on. It ignores the sparrow as spurious noise.

Crop all the things.

So how/what did I do to make things better?

Well, this:

Smaller, but much more bird for my buck.

I have a background in VFX, so cropping 10,000 images isn’t as daunting as it first seems.
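
If you’d rather script the crop than do it by hand, here’s a rough sketch of the idea in Python. It is not a record of how the crops above were actually done. It assumes you already have a bounding box for the bird in each image (the box format, the `padded_crop_box` and `bird_fraction` helpers, and all the numbers are made up for illustration), and it shows how much more of the frame the bird fills after cropping.

```python
def padded_crop_box(image_size, bird_box, margin=0.1):
    """Grow bird_box by `margin` on each side, clamped to the image bounds.

    image_size is (width, height); boxes are (left, top, right, bottom) in pixels.
    """
    width, height = image_size
    left, top, right, bottom = bird_box
    pad_x = int((right - left) * margin)
    pad_y = int((bottom - top) * margin)
    return (
        max(0, left - pad_x),
        max(0, top - pad_y),
        min(width, right + pad_x),
        min(height, bottom + pad_y),
    )


def bird_fraction(frame_box, bird_box):
    """Fraction of the frame's area that is covered by the bird's box."""
    frame_area = (frame_box[2] - frame_box[0]) * (frame_box[3] - frame_box[1])
    bird_area = (bird_box[2] - bird_box[0]) * (bird_box[3] - bird_box[1])
    return bird_area / frame_area


# Hypothetical example: a 1000x800 photo with the bird in a 200x150 box.
image_size = (1000, 800)
bird_box = (400, 300, 600, 450)

crop = padded_crop_box(image_size, bird_box)
before = bird_fraction((0, 0) + image_size, bird_box)
after = bird_fraction(crop, bird_box)

print(f"crop box: {crop}")
print(f"bird share of frame: {before:.1%} before, {after:.1%} after")
```

The box comes out in (left, upper, right, lower) order, which is the tuple that Pillow’s `Image.crop` accepts, so it could be handed straight to that if you go the Pillow route.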

Results:

It works! The model now takes the uncropped picture above and produces this:

Classifying 1040796_tcm9-259518.jpg:

house sparrow: 0.965244054794

###################

chaffinch: 0.0178133118898

wren: 0.00690548866987

dunnock: 0.00443097064272

robin: 0.00376934860833
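
That listing is a top-5 readout: the model scores every class it knows, and only the five highest are shown. A minimal sketch of that last step in Python, assuming you already have one probability per label (the `top_k` helper is hypothetical, the values are rounded from the listing above, and “blackbird” is an invented extra class to show something being dropped):

```python
def top_k(labels, probabilities, k=5):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(
        zip(labels, probabilities),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:k]


# Illustrative values only; "blackbird" and its score are made up.
labels = ["wren", "robin", "house sparrow", "blackbird", "dunnock", "chaffinch"]
probabilities = [0.0069, 0.0038, 0.9652, 0.0018, 0.0044, 0.0178]

for label, probability in top_k(labels, probabilities):
    print(f"{label}: {probability}")
```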

So now we have the ability to tell different species of birds apart. I now just need to attract them to my bird table…