In 2016, I moved from zone 1 to zone 6. This meant that whatcaniseefromtheshard.com had to be turned off, because I’d mounted the camera to my (old) front door. Now, 9 years later, I have managed to resurrect the shardcam.

In the old house, I could slap any old camera on my door and see the shard. Here in zone 6 land, the shard is ~6 pixels high on the same camera, so I need to do something cleverer.

Placement Constraints

The only place I can see the shard from is the roof. This means that I need a suitable weatherproof enclosure that can be mounted on a roof. The roof I can get access to is pitched at about 35-40 degrees, which adds a certain level of difficulty.

Fortunately for me, I already installed a bird table for a previous experiment, so I have something to mount my case to.

Weatherproof case

Previously, I had hot glued a rpi-zero camera case to the inside of a £5 Wilko birdbox. This was to capture birds at a bird feeder. It fits perfectly on the roof mounted bird table. However, as I’ve mentioned, even with the higher resolution camera of the pi-cam2, you can’t really see the shard.

Fiddling with optics

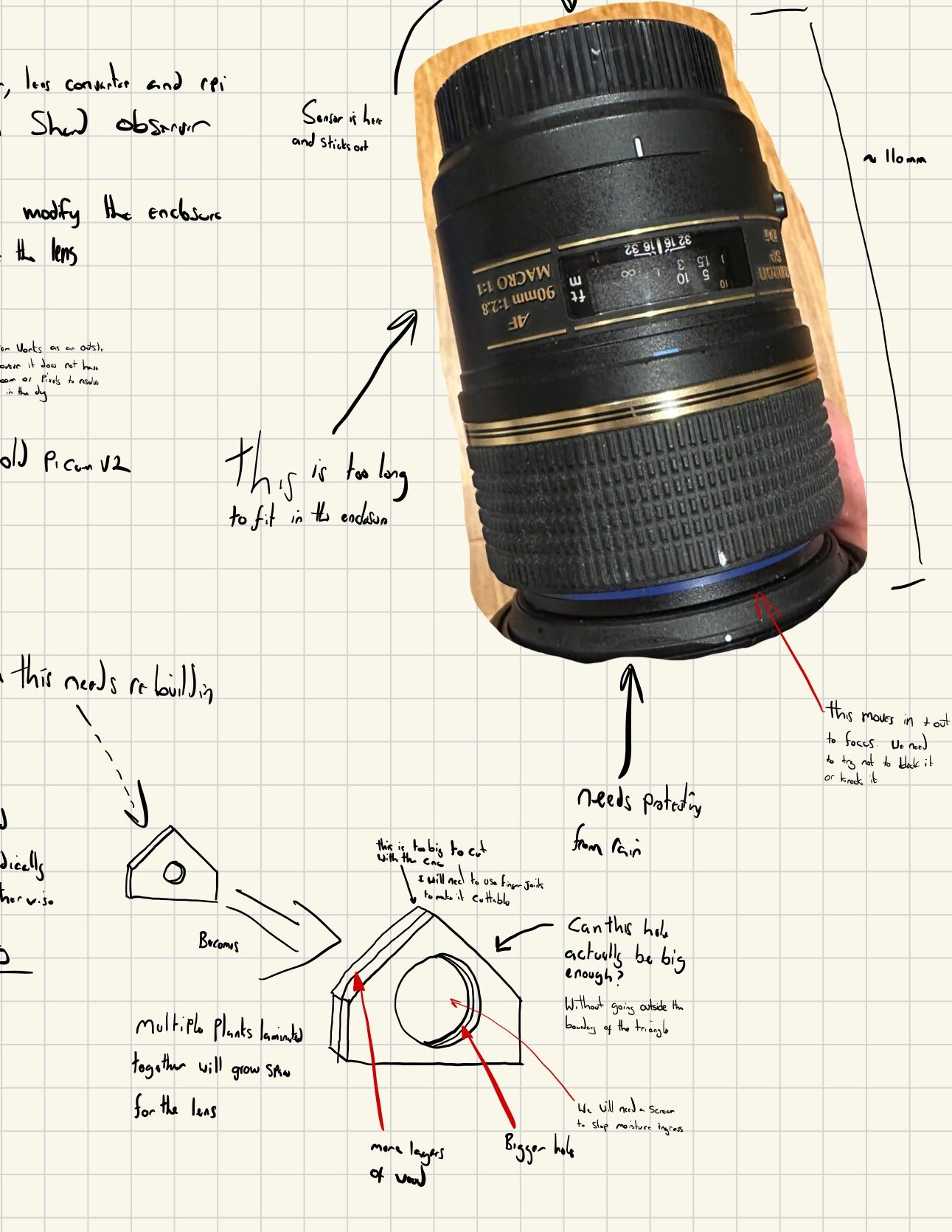

I need some zoom if I’m going to get a good picture of the shard. Now, I could, in theory, make my own lens. However, I don’t have that much time and I really don’t want to spend it learning optics. I could also mill some adaptors to connect the camera up to a secondhand macro lens I had. Again, much more work than I want to do.

I was, however, in luck, as I found a cheap secondhand HQ cam on eBay. All I need is an F-mount adaptor and the job’s a good ’un. This meant the total spend for this project was less than £42.

Why a macro lens? Because its focal length was something like 80mm, and it had a manual focus that goes way beyond normal ranges. This is useful because the lens adaptor doesn’t place the sensor at the correct focal point.

However the bigger problem is I now need to house a massive lens in the birdbox. This means I need to make a new faceplate:

I cut out the faceplate and it miraculously fitted on the first go. The lens fits in with a press fit. This is good for stability, but terrible because the focus lock is push activated, which means it’s a faff to install the lens in the housing.

Software

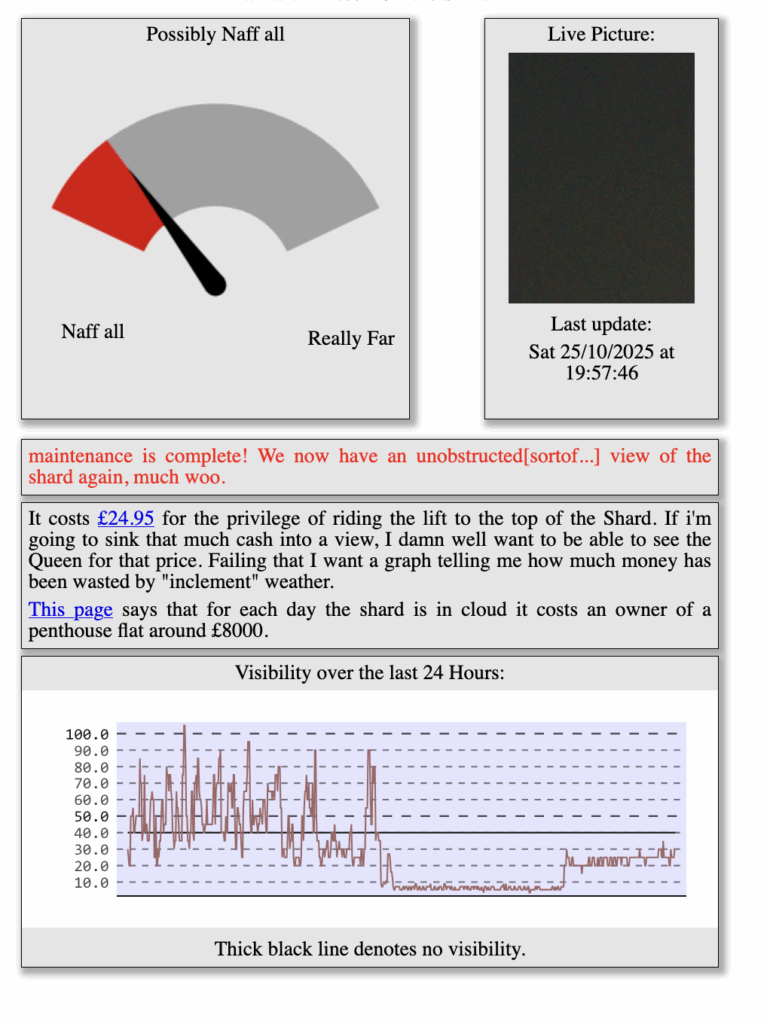

I originally wrote the software over ten years ago. I’m not a computer vision expert, so I found the simplest way to quantify cloudiness. (Spoiler: it uses the histogram distribution as a metric for how cloudy it is.)
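To give a flavour of what a histogram-based metric can look like, here is a minimal sketch. It is not the original shardcam code: the function name `cloudiness`, the 16 bins and the choice of normalised entropy as the measure are all my assumptions. The idea is that a frame full of flat grey cloud bunches its pixels into a few histogram bins, while a frame with real contrast spreads them out.

```python
import math


def cloudiness(pixels, bins=16):
    """Return a score in [0, 1]; higher means a flatter, lower-contrast frame.

    `pixels` is any iterable of 8-bit greyscale values (0-255).
    The entropy-based score is an illustrative assumption, not the
    metric the original software used.
    """
    hist = [0] * bins
    total = 0
    for value in pixels:
        # Map 0-255 onto a bin index, clamping anything out of range.
        hist[min(value * bins // 256, bins - 1)] += 1
        total += 1
    if total == 0:
        raise ValueError("no pixels supplied")

    # Shannon entropy of the histogram, normalised by its maximum, log(bins).
    entropy = -sum((n / total) * math.log(n / total) for n in hist if n)
    return 1.0 - entropy / math.log(bins)
```

Whether a narrow histogram really tracks cloud for a given view is something you would have to check against real frames; a cloudless blue sky is also fairly flat.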

This software relied on two things: 1) the position of the shard doesn’t change, 2) nothing else gets in the way of the shard, apart from clouds. However, I can’t make those assumptions here. The biggest issue is that, because the shard is so far away and we are so zoomed in, any kind of movement translates into a massive change in the position of the shard.
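A bit of back-of-envelope arithmetic shows why small movements matter so much. The sketch below assumes the lens really is about 80mm and that the HQ cam sensor has 1.55 µm pixels (my assumption about the sensor, not a number measured on this setup); `pixels_per_degree` is a hypothetical helper name.

```python
import math

FOCAL_LENGTH_MM = 80.0  # "something like 80mm", so treat as approximate
PIXEL_PITCH_UM = 1.55   # assumed pixel size of the HQ camera sensor


def pixels_per_degree(focal_length_mm, pixel_pitch_um):
    """How far a distant object moves on the sensor, in pixels, per degree of camera tilt."""
    shift_mm = focal_length_mm * math.tan(math.radians(1.0))
    return shift_mm / (pixel_pitch_um / 1000.0)


shift = pixels_per_degree(FOCAL_LENGTH_MM, PIXEL_PITCH_UM)
```

Under those assumptions a single degree of tilt moves the image by roughly 900 pixels, so even a tenth of a degree is enough to push a fixed crop well off target.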

The bird box is glued to the roof on three sides. It’s impervious to wind movements. What I hadn’t realised is quite how much the entire roof moves throughout the day. I assume it’s because of temperature/humidity changes.

I spent a long time trying to figure out how to create a “shard detector”. I refuse to use ML to do this, because it should be easy to implement with “classical” computer vision. Moreover, I don’t have a GPU to spare.

To find the shard I make the assumption that if the shard is visible, it’ll be the only vertical object in view. So I threshold the image to get nice contrast, run an edge detector, and extract contours. Any vertical contour that’s of the right size is assumed to be the shard. It seems to work pretty well.

This means that all I really need to do is use Hough transforms to find where the most vertical lines are clustered, and use those coordinates to place the crop for the website.
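Here is a sketch of the clustering and crop placement step. It assumes the line segments have already been extracted as `(x1, y1, x2, y2)` tuples, for example by something like OpenCV's `cv2.HoughLinesP`; the function names, the 20 pixel bins, the tilt limit and the crop size are all made up for illustration, and the crop is simply centred vertically.

```python
import math
from collections import Counter


def vertical_cluster_x(segments, max_tilt_deg=10.0, bin_px=20):
    """Return the x centre of the densest cluster of near-vertical segments, or None."""
    votes = Counter()
    for x1, y1, x2, y2 in segments:
        # Angle away from vertical: 0 for a perfectly upright segment.
        tilt = math.degrees(math.atan2(abs(x2 - x1), abs(y2 - y1)))
        if tilt <= max_tilt_deg:
            length = math.hypot(x2 - x1, y2 - y1)
            # Vote for the bin holding the segment midpoint; longer lines count more.
            votes[int((x1 + x2) / 2) // bin_px] += length
    if not votes:
        return None
    best_bin, _ = max(votes.items(), key=lambda kv: kv[1])
    return best_bin * bin_px + bin_px // 2


def crop_box(center_x, image_w, image_h, crop_w=400, crop_h=600):
    """Return (left, top, right, bottom) centred on center_x and clamped to the image."""
    crop_w, crop_h = min(crop_w, image_w), min(crop_h, image_h)
    left = min(max(center_x - crop_w // 2, 0), image_w - crop_w)
    top = (image_h - crop_h) // 2
    return left, top, left + crop_w, top + crop_h
```

When `vertical_cluster_x` returns `None` (no vertical lines, so probably no visible shard), one sensible option is to reuse the last known crop rather than guess.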

Website: evolved

The old website was a bit of a mishmash of things. There was a Perl script, or perhaps Python, that regenerated the website every minute. The shardcam physically pushed the image to the webserver via SSH. It also managed the archive, so I could make a timelapse.

I don’t really want to be scripting SSH from a tiny pi; I think that’s asking for trouble. So I’ve created a simple web service that receives images from the camera, and regenerates the website when a new image is uploaded. Slightly less Heath Robinson, but still not elegant.
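For a sense of how small such a service can be, here is a sketch using only the Python standard library. It is not the service that actually runs the site: the `/upload` path, the directory names, the file naming and the bare-bones page are all invented for illustration, and it has no authentication, which you would want before exposing it to the internet.

```python
import datetime
from http.server import BaseHTTPRequestHandler, HTTPServer
from pathlib import Path

ARCHIVE_DIR = Path("archive")  # hypothetical home for the timelapse archive
SITE_DIR = Path("site")        # hypothetical web root


def render_index(image_name):
    """Build a minimal page that shows the most recent image."""
    return (
        "<!doctype html><html><body>"
        f'<img src="{image_name}" alt="Latest view towards the shard">'
        "</body></html>"
    )


def store_image(data, archive_dir, site_dir, now=None):
    """Archive the upload, publish it as latest.jpg and regenerate index.html."""
    now = now or datetime.datetime.now()
    archive_dir.mkdir(parents=True, exist_ok=True)
    site_dir.mkdir(parents=True, exist_ok=True)
    archived = archive_dir / now.strftime("%Y%m%d-%H%M%S.jpg")
    archived.write_bytes(data)
    (site_dir / "latest.jpg").write_bytes(data)
    (site_dir / "index.html").write_text(render_index("latest.jpg"))
    return archived


class UploadHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        if self.path != "/upload" or length <= 0:
            self.send_error(400, "expected a non-empty POST to /upload")
            return
        store_image(self.rfile.read(length), ARCHIVE_DIR, SITE_DIR)
        self.send_response(204)  # success, nothing to send back
        self.end_headers()


def serve(port=8000):
    """Run the upload service until interrupted."""
    HTTPServer(("", port), UploadHandler).serve_forever()
```

Calling `serve()` starts the listener; the camera side then only needs to make a plain HTTP POST of the JPEG bytes to `/upload`, with no SSH keys on the pi.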

I now have a proper Time Series Database, so I should really use that to export graphs. But I’m not, so we’ll just ignore that and leave it as is.